Research @ ARC

Our Projects

Final Year Projects

FYP FAQ

Q: I am interested in Robotics. How do I do a FYP related to Robotics?

A: As the semester has already started, we are currently not accepting new FYP students. In the meantime, you can join our projects under a different module or programme, such as UROP or IWP, by contacting Prof Marcelo at mpeangh(a)nus.edu.sg. Alternatively, you may wait for the next round of the FYP selection process.

Q: Is there a deadline for applications?

A: Yes. The deadline depends on your home department or any other programme you are enrolled in, and it has been communicated to you by email. We also have an internal deadline of 15th May 2020. Note that topics are allocated on a first-come, first-served basis, so please contact us as soon as possible if you are interested in a topic.

Q: Are the listed topics individual FYPs? Will I be working alone on these projects?

A: It depends on the topic, but most topics here are part of a larger project, so you will be working alongside research staff and other students. However, FYPs are graded individually.

Q: Are the FYPs open only to specialisation students or FoE students?

A: No. We welcome all students.

Q: I am really interested in a specific field in robotics which is not listed here. Can I self-propose my own topic?

A: Please contact Prof Marcelo at mpeangh(a)nus.edu.sg.

Q: My question is not listed here. Is there anyone I can ask?

A: For further inquiries, email robotics(a)nus.edu.sg.

Collaborative Robots and Manipulator Arms

Mobile Manipulator

The combined manipulator arm and base platform can be operated either autonomously or remotely to assist humans in collaborative environments. The manipulator arm is a KUKA LBR iiwa 14 R820 with 7 degrees of freedom (DOF), fitted with a Robotiq 3-Finger Gripper. The base platform is omnidirectional with zero-radius turning, making it highly manoeuvrable across different workspace environments. The base can also be fitted with front and bottom cameras for obstacle and object detection. The setup is being used to explore Human-Robot Interaction in workshop environments, under a grant from A*STAR.
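
The description does not say how the base achieves omnidirectional, zero-radius motion. One common approach is a four-wheel mecanum drive, and the following sketch assumes that layout purely for illustration. It shows the inverse kinematics that turn a desired body velocity (forward, sideways, yaw rate) into individual wheel speeds. The `MecanumBase` class and its dimensions are hypothetical, and the signs assume the usual X roller arrangement; they would need checking against real hardware.

```python
from dataclasses import dataclass


@dataclass
class MecanumBase:
    """Hypothetical four-wheel mecanum base with rollers in an X pattern.

    Dimensions are illustrative only, not those of the ARC platform.
    """
    wheel_radius: float  # r, metres
    half_length: float   # lx, base centre to front/rear axle, metres
    half_width: float    # ly, base centre to left/right wheel, metres

    def wheel_speeds(self, vx: float, vy: float, wz: float) -> tuple[float, float, float, float]:
        """Inverse kinematics: body twist -> wheel angular speeds in rad/s.

        vx: forward speed (m/s), vy: leftward speed (m/s),
        wz: counter-clockwise yaw rate (rad/s).
        Returns (front_left, front_right, rear_left, rear_right).
        """
        k = self.half_length + self.half_width
        r = self.wheel_radius
        return (
            (vx - vy - k * wz) / r,
            (vx + vy + k * wz) / r,
            (vx + vy - k * wz) / r,
            (vx - vy + k * wz) / r,
        )


base = MecanumBase(wheel_radius=0.1, half_length=0.3, half_width=0.25)
# Zero-radius turn: left wheels spin backward, right wheels forward.
print(base.wheel_speeds(0.0, 0.0, 1.0))
```

Setting only `vy` makes the base strafe sideways without turning, which is what lets such a platform reposition the arm in tight workspaces.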

Autonomous Mobile Robots

Indoor Self-Driving Vehicles

Current research on self-driving vehicles (such as cars, buses and trucks) is largely implemented for public open roads. These high-speed use cases are generally governed by highway codes and rule-based driving regulations.

In dense indoor pedestrian traffic, people move along corridors in more organic and spontaneous paths, at times cutting across or moving against the flow of other pedestrians, so autonomous navigation poses a different set of challenges. Indoor corridors can also be narrow and contain obstacles. For an Autonomous Wheelchair to make such trips effectively and efficiently, it will need enhanced sensor-fusion perception to support advanced path planning and localization.

The targeted research outcome is socially graceful navigation in pedestrian (non-road) environments using multiple sensors, sensor fusion and AI (in particular, deep learning). New sensor fusion, localization and navigation techniques will be demonstrated.

Furthermore, the public increasingly expects robots to navigate in a socially acceptable manner, so social-science observation of human-robot interaction will become even more important for real-world applications. Finally, navigating narrow corridors and crowded, dynamic environments will require sensing beyond current and even next-generation technologies.
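
The section calls for sensor fusion without naming a method. As a minimal illustration, assuming two independent, unbiased sensors with Gaussian noise, two estimates of the same distance can be combined by inverse-variance weighting; this matches a scalar Kalman measurement update that treats one estimate as the prior. The function name, sensors and noise values are hypothetical and do not describe the wheelchair's actual perception pipeline.

```python
import math


def fuse_estimates(z1: float, var1: float, z2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent, unbiased Gaussian estimates of one quantity.

    Each estimate is weighted by the inverse of its variance, so the more
    certain sensor dominates. Returns (fused_value, fused_variance); the
    fused variance is never larger than either input variance.
    """
    if var1 <= 0 or var2 <= 0:
        raise ValueError("variances must be positive")
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused_var = 1.0 / (w1 + w2)
    fused = fused_var * (w1 * z1 + w2 * z2)
    return fused, fused_var


# Hypothetical readings: distance (m) to a pedestrian ahead of the wheelchair.
lidar_range, lidar_var = 2.10, 0.02 ** 2    # precise range sensor
camera_range, camera_var = 2.40, 0.20 ** 2  # noisier depth-from-vision estimate
d, var = fuse_estimates(lidar_range, lidar_var, camera_range, camera_var)
print(f"fused distance {d:.3f} m, std {math.sqrt(var):.3f} m")
```

The fused estimate lands close to the lidar reading because its variance is 100 times smaller, while the camera still contributes and slightly reduces the overall uncertainty.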

Autonomous Vehicles

Self-Driving Buses

This project aims to develop the perception stack for a self-driving bus. The 12-meter bus should be able to operate typical feeder services autonomously. The perception module we are developing focuses on detecting and tracking all dynamic actors in the environment and predicting each actor's future location. Using these predicted positions, the bus can then plan safer and more comfortable paths.

This project is done in collaboration with ST Engineering Land Systems.
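
The description does not state which prediction model the perception stack uses. A constant-velocity model is a common baseline: each tracked actor's current velocity is extrapolated over a short horizon, and the planner checks its clearance against the predicted positions. The sketch assumes a tracker already supplies each actor's position and velocity; the class, function names and scenario are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TrackedActor:
    """A tracked dynamic actor (e.g. pedestrian, car) in the bus's frame."""
    track_id: int
    x: float   # position, metres
    y: float
    vx: float  # velocity estimated by the tracker, m/s
    vy: float


def predict_positions(actor: TrackedActor, horizon_s: float, dt: float) -> list[tuple[float, float]]:
    """Constant-velocity rollout: one (x, y) per step dt, up to horizon_s."""
    steps = int(round(horizon_s / dt))
    return [(actor.x + actor.vx * k * dt, actor.y + actor.vy * k * dt)
            for k in range(1, steps + 1)]


def min_clearance(path: list[tuple[float, float]], predicted: list[tuple[float, float]]) -> float:
    """Smallest distance between the bus's planned position and the actor's
    predicted position at matching time steps."""
    return min(((px - ax) ** 2 + (py - ay) ** 2) ** 0.5
               for (px, py), (ax, ay) in zip(path, predicted))


# Hypothetical scenario: a pedestrian 20 m ahead, crossing at 1.5 m/s.
ped = TrackedActor(track_id=7, x=20.0, y=-3.0, vx=0.0, vy=1.5)
future = predict_positions(ped, horizon_s=3.0, dt=0.5)
bus_plan = [(5.0 * 0.5 * k, 0.0) for k in range(1, len(future) + 1)]  # 5 m/s straight
print(min_clearance(bus_plan, future))
```

A more expressive predictor, such as a learned model, could replace `predict_positions` while keeping the same interface: tracked state in, future positions out.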

Soft Robotics

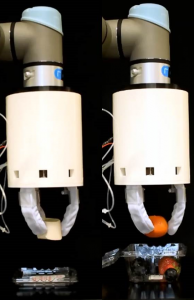

Soft End-Effectors

A sensorized robotic manipulator system has been developed for

(i) agriculture, such as fruit and vegetable picking and sorting,

(ii) food packaging, including but not limited to cakes, fruits and pies,

(iii) advanced manufacturing, such as handling glassware and electronic components, and

(iv) service robots.

It provides safe, compliant and efficient grasping, improving performance on tasks previously deemed too delicate for traditional robotic end-effectors.

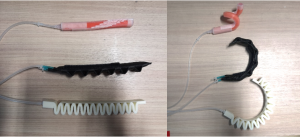

Soft Actuators

The figure on the left shows some examples of soft actuators. The top one is made from silicone elastomer, the middle one from nylon fabric, and the bottom one is 3D-printed. These actuators are pneumatically driven, and when pressurised, the prototypes exhibit different actuation profiles. The top actuator has a helical shape, while the other two bend in a plane.

Marine Robotics

Unmanned Surface Vehicle (USV)

The USV (BBASV 2.0) can operate independently or cooperatively, performing tasks remotely and autonomously on surface water. Its motors are placed in a vectored configuration for holonomic drive, giving the vehicle 4 degrees of freedom (DOF). It also has a Launch and Recovery System (LARS) that allows the AUV to be deployed autonomously from the USV, so the two vehicles can work in tandem. The vehicle is built to withstand conditions up to Sea State 3, and its systems can be easily configured to meet the specific needs of different stakeholders. The NUS Bumblebee USV team took part in the 2018 Maritime RobotX Challenge in Hawaii and emerged as champions.
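
To illustrate what a vectored thruster layout enables, here is a minimal thrust-allocation sketch. It assumes a hypothetical four-thruster X layout with illustrative dimensions and reversible thrusters, considers only the planar surge, sway and yaw components, and computes the minimum-norm thruster forces u = B^T (B B^T)^-1 tau for a requested wrench tau. It is not the BBASV 2.0's actual controller and ignores thruster saturation.

```python
import math

# Hypothetical X layout: (x, y, heading) of each thruster in the body frame,
# x forward, y left, heading counter-clockwise from x. Illustrative values.
HALF_LENGTH, HALF_WIDTH = 1.5, 0.6  # metres
THRUSTERS = [
    (HALF_LENGTH, HALF_WIDTH, math.radians(-45)),    # front-left
    (HALF_LENGTH, -HALF_WIDTH, math.radians(45)),    # front-right
    (-HALF_LENGTH, HALF_WIDTH, math.radians(45)),    # rear-left
    (-HALF_LENGTH, -HALF_WIDTH, math.radians(-45)),  # rear-right
]


def allocation_matrix():
    """3x4 matrix B mapping thruster forces u to the wrench [Fx, Fy, Mz]."""
    rows = [[], [], []]
    for x, y, a in THRUSTERS:
        rows[0].append(math.cos(a))
        rows[1].append(math.sin(a))
        rows[2].append(x * math.sin(a) - y * math.cos(a))  # yaw moment arm
    return rows


def solve3(m, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, b)]
    for c in range(3):
        p = max(range(c, 3), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(c + 1, 3):
            f = a[r][c] / a[c][c]
            for k in range(c, 4):
                a[r][k] -= f * a[c][k]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (a[r][3] - sum(a[r][k] * x[k] for k in range(r + 1, 3))) / a[r][r]
    return x


def allocate(wrench):
    """Minimum-norm thruster forces (negative means reverse thrust)."""
    B = allocation_matrix()
    BBt = [[sum(B[i][k] * B[j][k] for k in range(4)) for j in range(3)] for i in range(3)]
    lam = solve3(BBt, wrench)
    return [sum(B[i][k] * lam[i] for i in range(3)) for k in range(4)]


# Pure sway to the left: no surge force and no yaw moment requested.
print([round(u, 3) for u in allocate([0.0, 100.0, 0.0])])
```

Because each thruster is angled, a pure sway request yields thrusts whose surge and yaw contributions cancel while their sideways components add up, which is what lets the vehicle translate without turning.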

Autonomous Underwater Vehicles (AUV)

The AUV can be operated autonomously and remotely to perform underwater tasks with 6 degrees of freedom (DOF), at depths of up to 100 meters. It has front-facing and bottom-facing cameras, along with a front-facing sonar for long-range object detection. It navigates using its onboard Inertial Measurement Unit (IMU), Doppler Velocity Log (DVL) and acoustics system. The vehicle is highly modular and can be configured for different underwater challenges. The NUS Bumblebee AUV team took second place at the 2018 International RoboSub Competition in San Diego, USA.
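
The DVL and IMU mentioned above are typically combined by dead reckoning: the DVL measures velocity in the vehicle's body frame, the IMU supplies heading, and rotating and integrating the velocity gives a position estimate. The following is a minimal 2-D sketch under those assumptions; it ignores depth, roll and pitch, and the acoustic positioning that would normally correct accumulated drift. The function name and the test run are hypothetical.

```python
import math


def dead_reckon(start_xy, samples, dt):
    """2-D dead reckoning from DVL velocity and IMU heading.

    start_xy: initial (x, y) in metres, e.g. a fix taken at the surface.
    samples:  sequence of (u, v, yaw): u, v are DVL body-frame velocities in
              m/s (u forward, v to the left); yaw is the IMU heading in
              radians, counter-clockwise from the world x-axis.
    dt:       sample period in seconds.
    Returns the estimated positions, starting with start_xy.
    """
    x, y = start_xy
    track = [(x, y)]
    for u, v, yaw in samples:
        # Rotate the body-frame velocity into the world frame.
        vx = u * math.cos(yaw) - v * math.sin(yaw)
        vy = u * math.sin(yaw) + v * math.cos(yaw)
        # Forward-Euler integration; errors accumulate without external fixes.
        x += vx * dt
        y += vy * dt
        track.append((x, y))
    return track


# Hypothetical run: 10 s along x at 1 m/s, then 10 s after a 90° turn.
samples = [(1.0, 0.0, 0.0)] * 10 + [(1.0, 0.0, math.pi / 2)] * 10
end_x, end_y = dead_reckon((0.0, 0.0), samples, dt=1.0)[-1]
print(f"estimated end position: ({end_x:.2f}, {end_y:.2f}) m")
# → estimated end position: (10.00, 10.00) m
```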

ARC Research

Advanced Robotics Centre (ARC) is an interdisciplinary research centre, established jointly by NUS Engineering and NUS Computing, with a focus on human-robot collaborative systems.

The vision of ARC is to become a prominent centre of robotics research and a sought-after resource for industry and society in Singapore and the region.

The mission of ARC is to advance the state of the art in robotics research, and to develop novel robotic platforms and application areas that have a high impact on productivity and innovation in the industrial ecosystem and that improve the quality of our lives.
